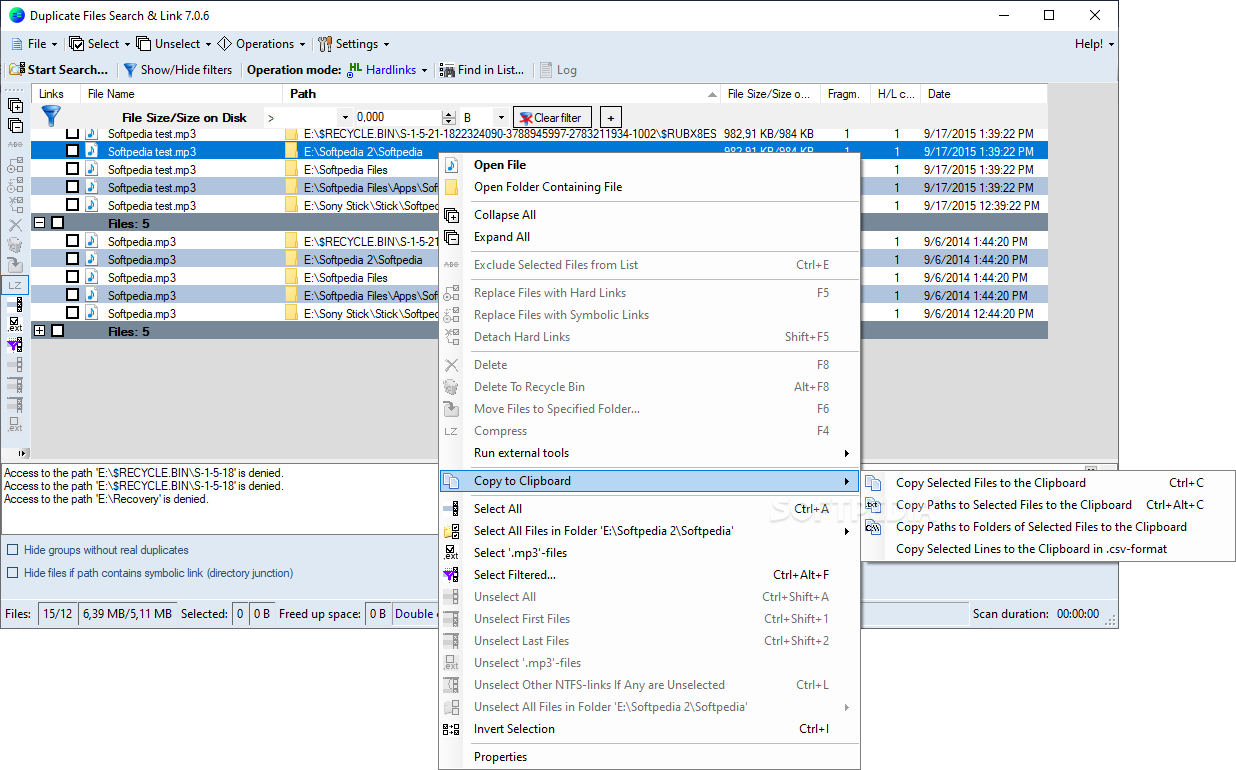

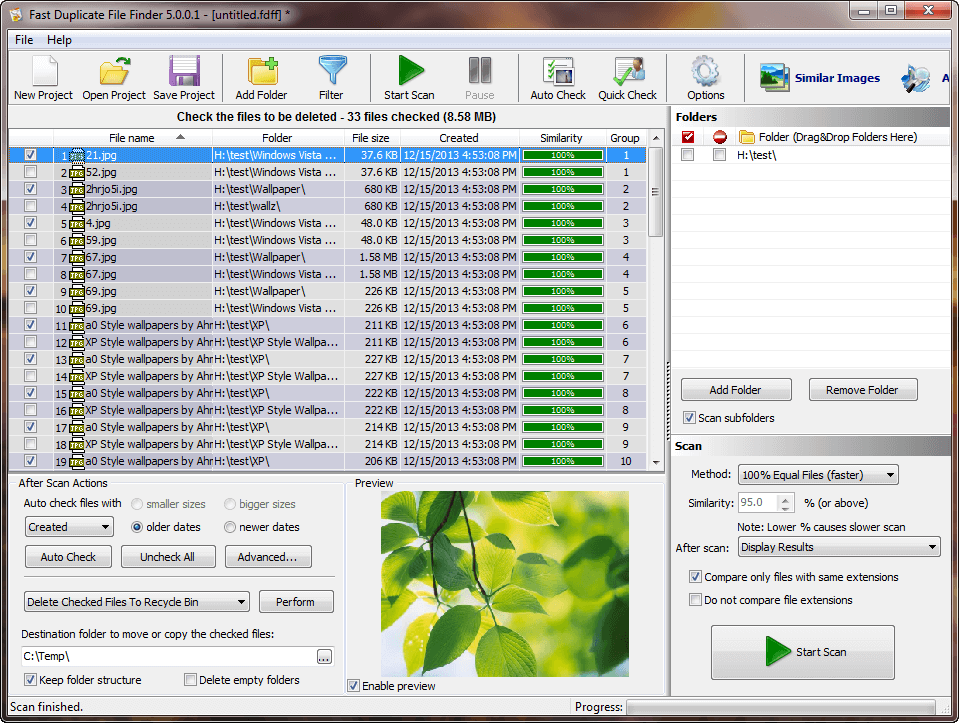

I've got big problems with duplicate files, I know. Part of the problem is hinted at here, where you caution to make backups before doing anything. Whatever it was, I know I created a real hell for myself in the past by trying to organise files and eliminate duplicates. 'Organising' meant collecting like files together in one place, but to software that expects to find them where they were, moving them is the same as deleting them. Blindly eliminating duplicates can wreck everything, as I think I have found in the past; it can destroy all your config.txt or similar files in a flash. You have to be very careful, right? That may have been because my duplicate elimination software was too simplistic, perhaps not checking file length and calling files 'duplicates' merely because they had the same name. But I also know from experience that fixing it is not a simple job. There's enough here to keep me busy for the rest of my life; it looks like I won't need any other sources for what I want to know.

The command has one serious flaw, though. It works well for a few small files, but you will run into trouble if the source directory contains many large files. Hash computation is a resource-intensive operation, and the aforementioned command computes a hash for every file, regardless of its size. It will therefore take ages to find duplicates in a directory with a large number of large files. In the next section, we optimize this command further.

Find duplicate files based on length and hash

A necessary condition for two files to be duplicates is that their sizes match, so files whose sizes differ cannot be duplicates. You probably know the Length property of the file objects returned by the Get-ChildItem PowerShell cmdlet. Because the Length value is read from the directory, obtaining it requires no computation. The trick is to compute hashes only for files that share their length with at least one other file, because we already know that files with different lengths can't be duplicates. This significantly reduces the overall run time of the command. It is accomplished by the PowerShell commands below:

```powershell
$srcDir = "D:\ISO Files"
$targetDir = "D:\DuplicateFiles"  # assumed example path: set this to an existing folder that will receive the moved duplicates
# Group by Length first so only same-size files are hashed; in each set of identical files, every copy after the first is offered for moving
Get-ChildItem -Path $srcDir -File -Recurse | Group -Property Length `
| Where-Object { $_.Count -gt 1 } | Select-Object -ExpandProperty Group | Get-FileHash `
| Group -Property Hash | Where-Object { $_.Count -gt 1 } | ForEach-Object { $_.Group | Select-Object -Skip 1 } `
| Out-GridView -Title "Select the file(s) to move to `"$targetDir`" directory." -PassThru `
| Move-Item -Destination $targetDir -Force -Verbose
```
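Before moving anything, it can help to see exactly which files the length-then-hash filter considers identical. The sketch below is not from the article: `Find-DuplicateFile` is a hypothetical helper name, and the explicit SHA256 algorithm (the Get-FileHash default) and the output shape (one object per set of identical files, with `Hash`, `FileCount` and `Paths`) are assumptions. It applies the same filter but is read-only; nothing is moved or deleted.

```powershell
# Find-DuplicateFile is a hypothetical helper, not a built-in cmdlet.
# Same length-then-hash filter as the command above, but read-only:
# it only reports sets of identical files and never moves or deletes anything.
function Find-DuplicateFile {
    param(
        [Parameter(Mandatory)]
        [string]$Path
    )

    # 1. Group by Length (read from the directory listing, so no hashing yet).
    # 2. Keep only sizes shared by two or more files, and hash just those files.
    # 3. Group by hash; every group with more than one member is a duplicate set.
    Get-ChildItem -LiteralPath $Path -File -Recurse |
        Group-Object -Property Length |
        Where-Object { $_.Count -gt 1 } |
        ForEach-Object { $_.Group } |
        Get-FileHash -Algorithm SHA256 |
        Group-Object -Property Hash |
        Where-Object { $_.Count -gt 1 } |
        ForEach-Object {
            [pscustomobject]@{
                Hash      = $_.Name
                FileCount = $_.Count
                Paths     = @($_.Group.Path)
            }
        }
}
```

For example, `Find-DuplicateFile -Path "D:\ISO Files" | Format-List` lists each set of identical files so you can review them before running the move command. Files that have the same size but different content are hashed yet never reported, because their hashes differ.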
